1 Introduction

The newly implemented Co-Lab initiative is an innovative approach to Library and Archives Canada’s (LAC) public services programming. As such, it satisfies the Directives of Treasury Board (TB), which require departments to devote a percentage of program funds to experimenting with new approaches and measuring the impact of their programs. Departments are expected to share the results of their experiments, positive, negative or neutral/null, as broadly as possible, with a strong default to public release. The Co-Lab initiative was identified for evaluation in LAC’s 2018–2023 Departmental Program Evaluation Plan.

1.1 Purpose of the Evaluation

The purpose of the evaluation was to assess the soundness of the initiative’s design, the effectiveness of its implementation to date and its use of resources.

2 Library and Archives Canada and Co-Lab Initiative Profiles

2.1 Overview of Library and Archives Canada (LAC)

LAC is a federal institution responsible for acquiring, preserving and providing access to Canada’s documentary heritage. LAC was created in 2004 through the merging of Canada’s National Archives (founded in 1872) and National Library (founded in 1953). The Library and Archives of Canada ActFootnote 1 came into force the same year. It defines the institution’s mandate, as follows:

- to preserve the documentary heritage of Canada for the benefit of present and future generations;

- to be a source of enduring knowledge accessible to all, contributing to the cultural, social and economic advancement of Canada as a free and democratic society;

- to facilitate in Canada co-operation among the communities involved in the acquisition, preservation and diffusion of knowledge; and

- to be the continuing memory of the Government of Canada and its institutions.

2.2 Co-Lab Initiative Overview

Context

The Co-Lab initiative was initially inspired by developing trends in the field of Galleries, Libraries, Archives and Museums (GLAMs) and by experimental crowdsourcing projects of other Canadian and national cultural institutions (more specifically, the National Archives and Records Administration’s (NARA) Citizen Archivist application). LAC has been working toward developing a crowdsourcing tool for a number of years. In 2016–2017, LAC conducted two pilot crowdsourcing projects for the transcription of the Coltman Report and the Diary of Lady McDonald. The two projects generated a lot of interest from the public and exceeded the expectations of LAC staff in terms of speed of completion and quality of work by volunteer contributors. That prompted the decision to put in place a formal LAC crowdsourcing initiative.

Co-Lab was officially launched in April 2018. The purpose of the initiative is to increase the digital content of LAC’s collections, their accessibility and discoverability.Footnote 2 The initiative uses a crowdsourcing Web application through which members of the public can transcribe, tag, translate and describe digital images in LAC’s collection.Footnote 3

Users are given the option of taking on a “Challenge” devised by LAC experts, or of using the new search engine “Collection Search Beta” to enable content that needs enhancing for contribution in Co-Lab. Challenges are thematically grouped sets of records from LAC’s collection that have been digitized. They are primarily textual in nature but can also contain photographs. Metadata created by Co-Lab users becomes accessible immediately and searchable in the Collection Search BetaFootnote 4 within 24 hours. Furthermore, users can choose to participate anonymously or to create a user profile that allows them to keep track of their contribution history.Footnote 5

Co-Lab users are provided with written guidelines on how to use the Co-Lab application and have access to a tutorial offering an in-depth tour of the “Challenges” and the types of contributions users can make. Additional help and guidance are provided through an email service desk that connects users with LAC staff. “Challenges” are grouped in themes and describe the type of materials that need enhancing, the language of the materials, and the level of completion of the Challenge.Footnote 6

Mistakes in contributions can be corrected by the users themselves or by other users. Participants are encouraged to set the status of their contributions to “Needs Review” to ensure that Co-Lab users review each other’s work and that no mistakes go undetected. In addition, LAC staff may intervene and lock entries for any reason, including to prevent further contributions where inappropriate content has been submitted. Typically, entries are only locked if both the “crowd” and LAC staff have reviewed the contribution and LAC staff want to maintain the integrity of the completed entry.Footnote 7 Finally, Co-Lab users can provide suggestions for “Challenges” to LAC staff via the Co-Lab email box.Footnote 8

Development of the Co-Lab web application

The Web application was developed internally at LAC by a multidisciplinary project team involving employees from the Public Services Branch and the Innovation and Chief Information Officer Branch (ICIOB). Prior to developing the application, the project team explored various options for its business requirements and technical functionality. The actual development of the application proceeded in three phases. The estimated timeframe was set at 13 months and was based on an incremental implementation approach. Phase 1 included the development of an administrative interface for the selection of content, a public interface for the collection of user-contributed content for images, and a database for storing user-contributed content. The administrative interface enables LAC staff to make selected images from LAC’s collection available for user contribution, while the public interface enables LAC clients/users to access the Co-Lab website, browse and contribute to the content that is presented there.Footnote 9 Phase 2 involved the development of the Co-Lab website and the search and edit functionalities that enable Co-Lab users to edit and add content on the Co-Lab Internet site and open images for contribution. Phase 3 involved the implementation of user registration and management modules, allowing users to create an account and sign into their account. A feature for supporting audio and video objects and opening those for contribution was also added.Footnote 10

2.3 Resources

The Co-Lab initiative is not a stand-alone LAC activity. As such, it does not have a separate budget and the resources dedicated to it come from the expenditures of the Public Services Branch and the ICIOB. They are presented in Table 1, below.

Table 1. Co-Lab Resources

| Branch | 2016–2017 O&M | 2016–2017 Salary | 2016–2017 FTE | 2017–2018 O&M | 2017–2018 Salary | 2017–2018 FTE |
|---|---|---|---|---|---|---|
| DG INNOVATION & CIO | 45,855 | 83,151 | 0.96 | 92,563 | 145,043 | 1.66 |
| PUBLIC SERVICES BRANCH | n/a | n/a | n/a | n/a | 29,531 | 0.16 |
| EBP (20%) | n/a | 16,630 | n/a | n/a | 34,915 | n/a |
| Total | 45,855 | 99,781 | 0.96 | 92,563 | 209,489 | 1.82 |
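The salary figures in Table 1 can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes that “EBP (20%)” means an amount equal to 20% of the branch salaries for the year, and that the salary total is the sum of branch salaries and EBP. Both readings are inferred from the table labels, and the names `salary`, `EBP_RATE` and `check_year` are hypothetical.

```python
# Salary figures transcribed from Table 1; None stands for "n/a".
salary = {
    "2016-2017": {"DG Innovation & CIO": 83_151, "Public Services Branch": None},
    "2017-2018": {"DG Innovation & CIO": 145_043, "Public Services Branch": 29_531},
}

# Assumption: "EBP (20%)" is 20% of the year's branch salaries.
EBP_RATE = 0.20


def check_year(year):
    """Return (EBP, salary total including EBP) recomputed for one fiscal year."""
    base = sum(v for v in salary[year].values() if v is not None)
    ebp = round(base * EBP_RATE)
    return ebp, base + ebp


for year in salary:
    ebp, total = check_year(year)
    print(f"{year}: EBP {ebp:,}, salary total {total:,}")
```

Under these assumptions, the recomputed amounts match the EBP and Total rows reported in the table for both fiscal years.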

2.4 Governance

As per the Departmental Results Framework (DRF), the Co-Lab initiative falls under the LAC Public Services Program. The Program enables Canadians to easily access and consult LAC’s collection and thereby increase their knowledge of Canada’s documentary heritage.Footnote 11

The responsibility for the initiative rests with the Exhibitions and Online Content Division of the Public Services Branch. Among other things, the Division is responsible for:

- developing specialized online resources to support access, including blogs, podcasts, and other online curation initiatives;

- creating online databases, research guides and digital finding aids;

- managing crowdsourcing initiatives;

- creating datasets for public use through the Government of Canada Open Data Portal; and

- developing and administering LAC exhibition projects, including the loan of LAC materials to museums and other institutions.

Decisions and approvals pertaining to the initiative are made within the Public Services Branch at the Director level.

2.5 LAC Priorities Related to the Initiative

The purpose of the Co-Lab initiative is to increase the digital content of LAC’s collections, their accessibility and discoverability.Footnote 12 It also has the following objectives:

- make LAC’s collection and holdings more accessible or usable;

- engage clients with the collection; and

- improve metadata.

Through its objectives and by making use of a crowdsourcing Web application, the initiative contributes to Priorities 1 and 4 of LAC’s Three-Year Plan 2016–2019, namely:

Priority 1: To be an institution fully dedicated to serving all of its clients: government institutions, donors, academics, researchers, archivists, librarians, students, genealogists and the general public.Footnote 13

Priority 4: To be an institution with prominent public service visibility that highlights the value of its collection and services.Footnote 14

The purpose and objectives of the initiative further support the following commitments made by LAC regarding the above priorities:

- to improve access to LAC’s collection by providing innovative tools and solutions and to make more of LAC’s collection accessible in digital format through digitization initiatives, enhanced content and online research instruments;Footnote 15

- to increase the visibility of its collection through dynamic public programming and by making use of social media and social networks;Footnote 16 and

- to demonstrate innovation by experimenting with the development of a Web platform for citizen participation so the public can help to transcribe and describe LAC’s collection.Footnote 17

In addition, the initiative reflects the following service priorities and outcomes of LAC’s Strategy for Services to the Public:Footnote 18

- to serve all Canadians as well as engage and grow new client groups;

- to achieve prominent public visibility for LAC collections and services;

- to provide hands-on digitization, tagging, and transcription tools, so that researchers increasingly can work autonomously on site and online, as well as support the open sharing of information and digital content online.

3 Evaluation Methodology

3.1 Evaluation Period

This is a targeted evaluation that focused on a specific key initiative of the Public Services Program. Given that the implementation of the initiative began recently, the evaluation covered the period leading up to the initiation of Co-Lab (2016–2017) and the year of its implementation (April 2018 to March 2019).

3.2 Evaluation Questions and Methods

The following evaluation questions were used to structure the analysis:

- Is the design of the Co-Lab initiative sound?

- Is the design of the Co-Lab initiative consistent with best practices?

- How successful has the initiative been so far?

The evaluation used a mixed methods approach, combining qualitative and quantitative lines of enquiry.

Document and data reviews

Documents (e.g., internal/external reports, program documents, statistics, administrative and financial reports) and data were reviewed to assess the design and implementation of the initiative.

Key informant interviews

Eight key informant interviews were conducted with senior management (Director General and Director levels), the immediate management team responsible for the initiative, and employees involved in its implementation. The interview questionnaires were based on the indicators identified in the evaluation matrix and included a mix of open- and closed-ended questions. Each interview questionnaire included a series of core and customized questions based on the informant’s level of knowledge and involvement with the initiative.

Contributors survey

An online survey of Co-Lab participants was conducted. The purpose of the survey was to collect data on the experience of registered Co-Lab contributors. The survey questionnaire made use of demographic, ranking, scaling and open-ended questions. The data was used to assess the design and implementation of the initiative.

The survey was distributed to the 124 users who were registered at the time of the survey. The selection was purposeful because the evaluation sought to gain an understanding of this particular group and their experience of the Co-Lab initiative. According to the literature on crowdsourcing in GLAMs, regardless of the size of the overall user community, it is a small core of users who carry out the majority of the work, and demonstrate higher levels of commitment and sustained participation.Footnote 19Footnote 20Footnote 21 It was therefore assumed that Co-Lab registered users would be more likely to exhibit these traits than anonymous users. In addition, there were concerns about over-solicitation of LAC clients because of the use of pop-up surveys on LAC’s Internet site and a pop-up tutorial on Co-Lab’s Internet site.

The response rate was 21.77%, which is considered acceptable given the recent launch of Co-Lab.
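The number of respondents is not stated, but it can be recovered from the figures that are: 124 invitations and a response rate of 21.77%. The sketch below is illustrative only; the function name `respondents_matching` is hypothetical. It lists every whole number of respondents that rounds to the reported rate.

```python
def respondents_matching(invited, reported_rate, decimals=2):
    """List respondent counts whose rate, rounded to `decimals`, equals `reported_rate`.

    `reported_rate` is passed as a string (e.g. "21.77") to avoid
    floating-point comparison issues.
    """
    return [
        n
        for n in range(invited + 1)
        if f"{100 * n / invited:.{decimals}f}" == reported_rate
    ]


print(respondents_matching(124, "21.77"))  # prints [27]
```

Only 27 respondents out of 124 yields 21.77%, so the survey findings in this report rest on 27 completed responses.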

3.3 Limitations of the Evaluation

The literature review of crowdsourcing initiatives at cultural institutions does not provide a solid definition of what constitutes success, and there are no standards for crowdsourcing that institutions are required to follow. However, the literature has identified basic design principles and best practices, which were used to evaluate the design of the Co-Lab initiative. Public participation seems to be the main indicator of achievement for crowdsourcing initiatives that has emerged so far from the literature. The extent, consistency and sustainability of public participation over time provide a valid and reliable way to assess the performance of such initiatives.

There are no published evaluation studies of crowdsourcing initiatives in cultural institutions. Therefore, the evaluation used the crowdsourcing literature to develop the evaluation matrix and criteria.

Finally, there is limited information on the budget and costing of cultural sector crowdsourcing initiatives, so no comparative analysis could be performed as to the appropriateness of resources dedicated to Co-Lab.

3.4 Coding of Findings

Evaluation findings were colour-coded to emphasize the aspects of the initiative that require special attention.

- Green: No improvement needed

- Yellow: Some improvements needed/Potential area for improvements

- Red: Improvements needed/Recommendations

4 Findings

4.1 Design of the Initiative

The evaluation uses a general definition of the term “design.” For the purposes of this evaluation, “design” is defined as the act of conceiving and planning the delivery of the Co-Lab initiative. The evaluation looked at the following:

- how the idea behind Co-Lab was conceived;

- the conceptualization of the approach for the realization of Co-Lab; and

- the planning of the initiative (i.e., expected results, resources, governance and monitoring).

Does the initiative clearly identify what needs or issues it seeks to address?

Finding 1:

Co-Lab management has a clear vision of the initiative and its objectives.

Interviews with Co-Lab management revealed that there has been a willingness to develop a “user-contributed content tool” at LAC for a long time. According to management, the inspiration came from work under way at other cultural institutions, more specifically NARA’s Citizen Archivist application. Co-Lab management went on to point out that LAC’s executive gradually opened up to the idea as more and more cultural institutions undertook initiatives of this type and more evidence of their merit became available.

Management consulted with other cultural institutions, such as the Library of Congress, NARA, Bibliothèque Nationale de France and some Canadian institutions that already had crowdsourcing initiatives or projects in place. They inquired about the kind of issues those institutions had encountered and how they had addressed them. Internal consultations were also held with LAC employees to solicit their feedback on having a crowdsourcing initiative at LAC.

Interviewees recounted that LAC, Canadiana and OurDigitalWorld signed a Memorandum of Understanding for the building of a crowdsourcing tool in 2007. The Coltman Report was subsequently used to pilot that tool. It was selected because of its historical significance and uniqueness (i.e., it is the only copy in existence and it is of particular importance to the Métis community). The Public Services Branch worked closely with the Métis community, which, according to certain interviewees, was the reason why the project was completed so quickly. That ultimately led to the development of the Co-Lab initiative in 2017, as it signalled a public appetite for such initiatives.

Co-Lab management acknowledged that, while the Coltman Report initiative was very focused and targeted a specific community, Co-Lab is quite different because it includes various materials and numerous tasks, and involves a much larger audience. At present, management’s efforts are dedicated to testing the interest of the public in participating in Co-Lab, and based on that, they intend to develop the initiative further.

The objectives of Co-Lab, according to Co-Lab management, are to:

- make LAC’s collection and holdings more accessible or usable;

- engage clients with the collection; and

- improve metadata.

Co-Lab management explained further that Co-Lab is separate from LAC’s authoritative MIKANFootnote 22 catalogue. In their view, Co-Lab is meant as a public version of the catalogue, in the sense that the keywords and descriptions are done by the public using common language (rather than standard specialized terminology) that is closer to the general public and can be picked up by external search engines like Google. According to interviewees, the advantage of Co-Lab is that it adds another layer to the descriptions of the LAC collection, thereby enhancing it and making it easier to search.

The expectations of Co-Lab management for the initiative in the short term were to launch the Co-Lab application, get the public involved, improve the functionality of the application, and increase the level of description of the LAC collection thereby improving its searchability. In the long term, management intends to continue to improve and enhance the functionality of the application.

Is the design of the Co-Lab initiative documented properly?

Finding 2:

The conceptualization of Co-Lab and key strategic aspects (such as vision, governance, roles, concepts) were not documented sufficiently.

The documentation of the conceptual and strategic components of the initiative is fragmented and insufficient. There is no overarching document that:

- provides an overview of management’s vision for the initiative and what it intends to accomplish;

- demonstrates the reasoning behind the selected implementation approach;

- details the governance of the initiative;

- outlines the roles of the various stakeholders;

- defines key concepts and terminology; and

- defines what constitutes success and how it will be measured.

All of the above components are dispersed across various sources, such as the Departmental Plan, PowerPoint presentations and Co-Lab’s website, and in some cases, they are not explicit. In addition, the governance of the initiative and the process for selecting and preparing Co-Lab Challenges are not sufficiently documented.

Management and staff interview data also indicated that performance measures are still under development and that minimal performance data is being collected. This is understandable considering that the initiative is at the early stages of its implementation.

Documenting the strategic thinking that drives Co-Lab involves more than just the IT business requirements for the development of the Web application. It provides a crucial source of data and evidence that enables the assessment of the initiative’s progress and the attainment of results, as the initiative evolves. Therefore, Co-Lab management needs to address this gap.

Recommendation 1:

As the initiative evolves, document the strategic thinking around Co-Lab and its future directions.

Is the design of the Co-Lab initiative sound and consistent with best practices?

Finding 3:

Co-Lab’s design is consistent with best practices and key crowdsourcing design principles in cultural institutions.

To determine the soundness of Co-Lab’s design, the evaluation examined its consistency with key crowdsourcing design principles identified in the literature on crowdsourcing in cultural institutions. The analysis is based on several studies by experts in the field. The studies draw on a variety of sources, such as first-hand examinations of crowdsourcing projects at various cultural institutions, surveys of volunteers/participants and staff, and reviews of crowdsourcing practitioners. The key design principles identified in the studies fall under the following three categories:

- definition of purpose;

- definition of roles; and

- user community relations.

Definition of purpose

Finding 4:

Co-Lab’s design clearly articulates the purpose of the initiative and the outcomes it creates for LAC, the Co-Lab users and the Canadian public.

According to the literature, crowdsourcing initiatives/projects need to do the following to generate interest and participation:Footnote 23Footnote 24Footnote 25

- articulate their purpose clearly;

- demonstrate how their purpose satisfies the mission and goals of their creating cultural institution; and

- define the value they create for all of their stakeholders (i.e., the institution, the participants and the audience/public).

Co-Lab’s documentation demonstrates a clear and logical connection between the purpose of the initiative and the overall mission and priorities of Library and Archives Canada. This connection is discussed in section 2.5 of this report.

Value Co-Lab creates for LAC

Co-Lab documentation and interviews with Co-Lab management and staff clearly indicate that the value the initiative seeks to create for LAC is to enrich the description of LAC’s collection and holdings.

Value for participants

Documentation and interview data indicate that the intended value Co-Lab creates for users is in providing them with an opportunity to immerse themselves in Canadian history and to have a more intimate experience of Canada’s documentary heritage. It also provides an opportunity for users to utilize their skills. The Co-Lab user survey revealed that the motivations of users for becoming involved in Co-Lab are consistent with the value the initiative was intended to create for users and are indicative of the value contributors derive from participating in Co-Lab. Respondents indicated the following motivations:

- enjoyment of working with archival/historical documents;

- desire to contribute to Canada’s documentary heritage and to give back to LAC;

- making the collection discoverable for others;

- interest in history, library science, archival science and genealogy;

- desire to improve skills; and

- curiosity.

Value for the Canadian public

Document review, interview data and evaluation team observations of the Co-Lab functionality reveal that the outputs of Co-Lab activities (i.e., transcriptions, translations, tags, and descriptions) have wider benefits for all users of LAC’s collection as they provide richer access to content. For example, the public and researchers can have access to the original digitized record, its transcribed content and its translation. In addition, transcription allows every part of a handwritten document to become discoverable through keyword searching and it also facilitates translation and data mining. Furthermore, individuals who have a visual impairment or who cannot read cursive text have access to the transcribed and translated content. Moreover, the tags created by Co-Lab users allow the public to search the collection using common language keywords.

Definition of roles

Finding 5:

In general, the role of users is clear; however, certain aspects of it need to be clarified further.

According to the literature, crowdsourcing initiatives or projects should be open, clear and specific about the type of participation expected from contributors, how the institution will use their work, and what rights (if any) they have regarding the products of their work. This helps users to determine whether the project/initiative is right for them and whether it meets their particular participatory needs.Footnote 26Footnote 27Footnote 28

In addition, the literature highlights the importance of having clear and explicit guidelines or information about user behaviour and acceptable content as they influence the types of users the initiative would attract and set the tone of interaction for the user community.Footnote 29 Moreover, that demonstrates respect for participants, the time they invest, the effort they put in, and their abilities. It also establishes trust between the user community and the institution.Footnote 30Footnote 31

There appear to be no explicit guidelines that define and direct Co-Lab user behaviour. There are indirect references regarding user behaviour in the Questions and Answers section on the Co-Lab website that encourage users to delete content they believe is inappropriate and specify that Co-Lab content can be locked by staff when inappropriate contributions are detected.Footnote 32 However, there is no definition of what constitutes an inappropriate contribution. More specifically, there is no definition of acceptable and unacceptable use, violation of acceptable use and consequences for violation. In addition, there are no guidelines or information regarding LAC’s handling of Co-Lab users’ work or regarding users’ rights to their work.

Consideration for action 1:

Define and document what constitutes an “inappropriate contribution.” Implement clear guidelines for user behaviour and for treatment of violations.

User community relations

Finding 6:

The Co-Lab user community needs more/better support.

According to the literature, the relationship between the project team and the users affects the level of participation, the motivation and the quality of contributions of users.Footnote 33Footnote 34 Therefore, initiatives should be designed in a way that promotes the creation and sustainability of a vibrant community. To that end, effective support mechanisms should be put in place.Footnote 35Footnote 36Footnote 37 Support mechanisms could include tools, instructional/guidance materials, communication/interaction platforms, acknowledgement, recognition/an awards system, etc., or any combination thereof.

Co-Lab has developed a variety of tools to support its users. There is an interactive tutorial, which provides an overview of the Co-Lab application and its functionality. Staff have also developed a “Questions and Answers” section on the Co-Lab website, providing general information on common questions and concerns that users might have. In addition, staff have developed guidelines and instructions on how to perform the main Co-Lab tasks, namely: transcription, translation, tagging and description. There is an additional task, reviewing users’ contributions, which is not clearly identified as such and is not displayed with the same prominence as the other tasks. Apart from a brief reference in the Questions and Answers section of the Co-Lab website, there is no definition of what the task entails and there are no specific guidelines or instructions on how to carry out that task. Further assistance is available to contributors via the electronic mailbox on Co-Lab’s website and via the reference staff at LAC’s public service area at 395 Wellington in Ottawa.

In addition to effective support mechanisms, the literature identifies a number of design components that should be taken into consideration when developing a crowdsourcing initiative. They are important to have because they build the confidence of users, ensure the best utilization of users’ knowledge, time and skills, and encourage consistency.Footnote 38Footnote 39Footnote 40 Moreover, they ensure a more satisfying user experience, contribute to a sense of achievement and encourage continued participation. Table 2 below lists the components and demonstrates how they have been reflected in the design of Co-Lab.

Table 2: List of Crowdsourcing Design Components and Their Applications in Co-Lab

| Design components for supporting users in crowdsourcing initiatives (literature) | Co-Lab design components for supporting users |
|---|---|
| Contributors need to be able to see the result of their contribution within a reasonable timeframe and contribute at their own convenience with minimal effort. | Co-Lab users see the results of their contribution to “Challenges” immediately, and any metadata they contribute becomes searchable via LAC’s search engine “Collection Search Beta” within 24 hours; contributors can work at their own pace and on their own time from anywhere, anytime. Under the title of each Co-Lab Challenge, a “percentage status indicator” shows the overall results. |
| Contributors should be provided with options regarding the tasks they perform (i.e., starting new ones or reviewing submitted tasks), and be able to refer to their previous contributions. | Co-Lab users have the option to contribute anonymously or to create a user profile. Through their profile, users can refer to the history of their contributions. Users have the option to contribute to structured activities, such as a “Challenge,” to start new ones via the “enable for contribution” feature of the “Collection Search Beta” search engine, and to review the contributions of other users. |
| Tasks that need to be started, completed and/or reviewed should be clearly identified. | The status of each task for each object in a “Challenge” is clearly identified and users can set the status to one of the following categories: “Not Started”; “Incomplete”; “Needs Review”; and “Complete.” The status of each task helps users identify the type of actions they can perform concerning each task. |
| Task instruction should be concise, easy to follow, and sufficiently detailed to enable contributors to complete the task efficiently and effectively. It should be delivered in various formats that make use of automatic displays and that are easy to navigate. | Instructions on how to complete the Co-Lab tasks and how to use the application are provided in the form of detailed guidelines, a tutorial and Questions and Answers. |
| Contributors should be able to navigate the crowdsourcing site with ease and/or minimal assistance from staff and see the status of the tasks to which they are contributing. | The structure of the Co-Lab application guides users through the completion of the necessary tasks associated with each Challenge. The application makes use of electronic forms, templates, dialogue boxes, interactive menus, etc., to facilitate ease of use. The task status indicates to users what actions are needed for each object. A “percentage status indicator” under each Co-Lab Challenge signals to users the progress toward its completion. |

Co-Lab user survey data revealed that most of the respondents have read the Co-Lab guidelines and have used the tutorial. Overall, respondents indicate that they have had a positive experience with the guidance tools available to them. Users describe them as useful, clear, simple, and easy to follow and use. In addition, survey data indicated that, overall, respondents can easily use and navigate Co-Lab, and are able to follow the progress of the Challenges to which they are contributing. However, some users report having difficulties with the guidance tools and with navigating and using the Co-Lab application. For example, some users report feeling uncertain whether they are revising their own contributions or those of others. Other users report having difficulties with the translation functionality (i.e., there is no side-by-side view, and going back and forth between two separate windows makes it difficult to keep one’s place in the text).

Few respondents reported a need to communicate with Co-Lab staff to obtain help and clarification. Those who sought assistance indicated that their interaction with staff was positive, and that staff were professional, courteous and had responded in a timely manner.

The literature mentioned that another way of providing effective support to users and ensuring good relationships with them is by conveying a sense of community. Typically, this is done by supporting user interaction, by acknowledging the participation of users, by being attentive to regular users, and by encouraging users to share content to which they have contributed with others outside the community.Footnote 41Footnote 42 Those factors have been shown to influence the motivation of certain contributors to participate and, more specifically, to continue their involvement in a project or initiative. Moreover, interactive tools, such as discussion forums or posting platforms, could facilitate community self-management and have been used by some cultural institutions as a mechanism for user self-moderation and coordination.Footnote 43

As indicated by evaluator observations, document review and interviews with Co-Lab management and staff, Co-Lab does not currently have the functionality to enable direct interaction between its users, and the interaction between staff and users is very limited. Co-Lab staff clarified that their dealings with users involve primarily answering questions or providing assistance via the Co-Lab electronic mailbox. Data from the Co-Lab user survey confirms that users have not interacted with each other, and some respondents explicitly stated that they prefer not to interact. Interestingly, certain respondents indicated that they have had positive interactions with other users, although they do not provide any details as to the method they used to communicate with each other. There are also some respondents who indicated a strong desire for interaction with fellow users and for having a dedicated interactive space on the Co-Lab website.

Interviews with Co-Lab management and staff revealed further that Co-Lab does not have formal mechanisms in place for acknowledging users, or for enabling users to share Co-Lab content. Nevertheless, respondents to the Co-Lab user survey indicated that they tell others about Co-Lab, and few confirmed that they are creating links between their Co-Lab contributions and their social media accounts.

Consideration for action 2:

More support should be made available to the user community to ensure that it has what it needs to collaborate and manage itself effectively.

Has the initiative defined what constitutes success and how it will be measured?

Finding 7:

Co-Lab management has identified elements that contribute to the success of Co-Lab; however, success is not defined and measured.

Co-Lab management identified a number of elements that, in their opinion, contribute to the overall success of the initiative. They appear to form two broad categories: operational and user related (see Table 3, below). However, it is not clear what constitutes success for the initiative.

Table 3. Co-Lab Success Elements Identified by Co-Lab Management

| Operational | User related |
|---|---|
| continuous support from LAC | user participation |
| good technological performance | obtaining client suggestions for Challenges |
| improved search functionality of the database | making use of client feedback |
| content | |
| commitment and respect | |
| good collaboration between Public Services Branch and ICIOB | |

Interviews with Co-Lab management indicated that currently they do not have any metrics for measuring success but intend to develop them. At present, they are primarily measuring the interaction of the users with the Co-Lab application.

Recommendation 2:

Define and document what success for the initiative is and how it will be demonstrated.

4.2 Effectiveness of Implementation

For the purposes of this evaluation, “implementation” is defined as the process and means by which the Co-Lab initiative was put in place and by which it is administered. This includes the deliverables of the initiative and any preliminary results or indication of result attainment.

According to the literature, physical participatory platforms do not need to be designed behind the scenes. It is more beneficial if they are released unfinished and the functionality is developed and adapted based on how participants respond to the platform, their interaction with it and the nature of their experience with it. The intention behind such an approach is continuous improvement based on use (i.e., the more it is used, the better it gets) at the core of which is a built-in expectation that the project or initiative itself will change and develop over time as a result. The advantage of this type of design approach lies in its ability to provide a real time feedback loop that can generate valuable design insights and lead to improved participant experience.Footnote 44

Interviews with Co-Lab management and staff revealed that the initiative uses an iterative development approach to ensure maximum flexibility and adaptability. As demonstrated by the document review, the development of the Co-Lab application proceeded initially in three phases, each of which involved extensive user testing. Interviews indicated that improvements to the current functionality of Co-Lab and adding new functionality are being considered, based on feedback from users.

How is the implementation of the initiative monitored?

Finding 8:

Co-Lab monitoring is limited and performance metrics are still under development.

Document review and interview data revealed that currently Co-Lab’s monitoring is limited and performance metrics are still under development. According to Co-Lab management, there is no robust reporting system in place yet and they need to develop a better understanding of their users. They pointed out further that the Co-Lab staff monitor participation in Challenges and conduct a cursory review of the content contributed by users. The content itself is not monitored and staff only take action if they are alerted about inappropriate language.

Co-Lab staff indicated that priority was given to the development and launching of the Co-Lab application and that they are now in the process of defining how they will measure the success of the initiative and its performance. There is a limited number of performance indicators on which they are reporting. Feedback from the public is collected through the Co-Lab email box. In addition, staff indicated that Co-Lab has not reached sufficient maturity and the functionality of the application is under continuous development. The application was initially tested in a closed circle, but since its public release staff have been getting more feedback on its performance and the improvements needed. They have made small adjustments to address issues reported by users, but the overall concept has not changed.

Recommendation 3:

- Ensure that the reporting system currently being developed identifies meaningful performance measures that include output and outcome indicators.

- Ensure that consistent performance data is gathered as the initiative evolves so that progress toward expected results can be demonstrated over time.

- Document the rationale for any major changes to performance measurement.

4.3 Results Achieved So Far

Because of the innovative and experimental nature of the initiative, and considering that it was launched in April 2018, the evaluation only looked at the extent to which the immediate outputs of the initiative have been put in place. Therefore, the evaluation examined the:

- Web-application launch;

- rate of completion of Co-Lab Challenges; and

- response and rate of participation from the public.

Public participation and rate of completion of Co-Lab Challenges

Finding 9:

There is a good level of public participation in Co-Lab and the rate of completion of Co-Lab tasks is positive.

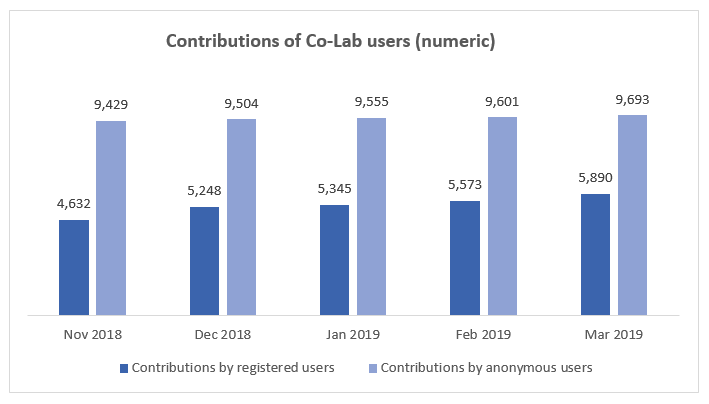

Overall, Co-Lab statistics demonstrate that the number of registered users has been growing steadily from 125 in November 2018 to 148 in March 2019. Statistics further demonstrate that the number of contributions has also been growing (see Figure 1, below). It is interesting to note that anonymous contributions are considerably higher in number than the contributions made by registered users.

Figure 1. Contributions of Co-Lab UsersFootnote 45

Text version: Figure 1

Figure 1 presents data on the number of contributions made by Co-Lab's registered and anonymous users per month from November 2018 to March 2019.

Contributions of Co-Lab Users

| | Nov 2018 | Dec 2018 | Jan 2019 | Feb 2019 | Mar 2019 |
|---|---|---|---|---|---|
| Contributions by registered users | 4,632 | 5,248 | 5,345 | 5,573 | 5,890 |
| Contributions by anonymous users | 9,429 | 9,504 | 9,555 | 9,601 | 9,693 |

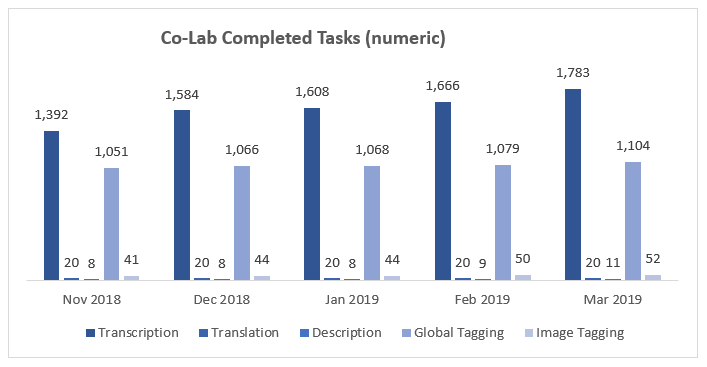

There is also an observable positive trend with regard to levels of completion of various Co-Lab tasks,Footnote 46 which demonstrates the extent of participation of users. The data demonstrates that the Transcription and Global Tagging tasks in particular have the highest rates of completion, while the rates of completion of the remaining tasks have remained stable (see Figure 2, below).

Figure 2. Co-Lab Completed TasksFootnote 47

Text version: Figure 2

Figure 2 presents data on the number of completed Co-Lab tasks per month from November 2018 to March 2019 and by task category, namely: Transcription, Translation, Description, Global Tagging and Image Tagging.

Co-Lab Completed Tasks

| | Nov 2018 | Dec 2018 | Jan 2019 | Feb 2019 | Mar 2019 |
|---|---|---|---|---|---|
| Transcription | 1,392 | 1,584 | 1,608 | 1,666 | 1,783 |
| Translation | 20 | 20 | 20 | 20 | 20 |
| Description | 8 | 8 | 8 | 9 | 11 |
| Global Tagging | 1,051 | 1,066 | 1,068 | 1,079 | 1,104 |
| Image Tagging | 41 | 44 | 44 | 50 | 52 |

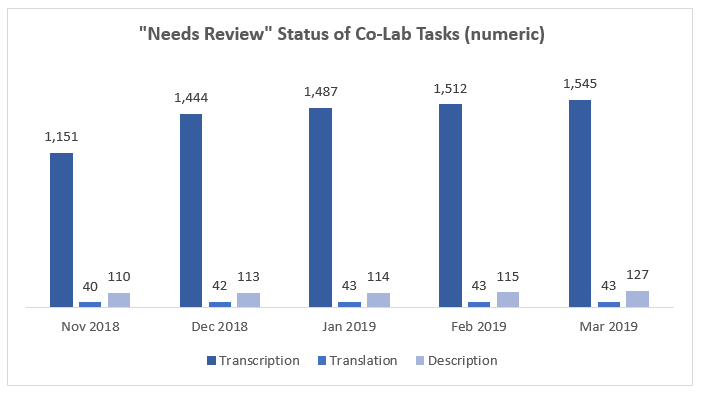

Another interesting emerging trend from Co-Lab statistical data is that the volume of transcription tasks, whose status was set to “Needs Review,” has been steadily increasing since the launch of the initiative and is closely proportionate to that of completed transcription tasks (see Figure 3, below).

Figure 3. “Needs Review” Status of Co-Lab Tasks

Text version: Figure 3

Figure 3 presents the data on the number of Transcription, Translation and Description Co-Lab tasks whose status was set to "Needs Review" by Co-Lab users. The data is presented by month from November 2018 to March 2019.

“Needs Review” Status of Co-Lab Tasks

| | Nov 2018 | Dec 2018 | Jan 2019 | Feb 2019 | Mar 2019 |
|---|---|---|---|---|---|
| Transcription | 1,151 | 1,444 | 1,487 | 1,512 | 1,545 |
| Translation | 40 | 42 | 43 | 43 | 43 |
| Description | 110 | 113 | 114 | 115 | 127 |
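The relationship between the “Needs Review” volume and completed transcription tasks can be checked from the text versions of Figures 2 and 3. The following Python sketch is illustrative only and was not part of the evaluation. It assumes that the first row of the Figure 3 data corresponds to Transcription tasks, and the function name `review_ratios` is hypothetical.

```python
# Monthly counts copied from the text versions of Figures 2 and 3.
MONTHS = ["Nov 2018", "Dec 2018", "Jan 2019", "Feb 2019", "Mar 2019"]
COMPLETED_TRANSCRIPTION = [1392, 1584, 1608, 1666, 1783]
# Assumption: the first data row of Figure 3 is the Transcription series.
NEEDS_REVIEW_TRANSCRIPTION = [1151, 1444, 1487, 1512, 1545]


def review_ratios(needs_review, completed):
    """Return the "Needs Review" count divided by the completed count, per month."""
    if len(needs_review) != len(completed):
        raise ValueError("both series must cover the same months")
    return [round(n / c, 2) for n, c in zip(needs_review, completed)]


ratios = review_ratios(NEEDS_REVIEW_TRANSCRIPTION, COMPLETED_TRANSCRIPTION)
for month, ratio in zip(MONTHS, ratios):
    print(f"{month}: {ratio:.2f}")
```

Under that assumption, the ratio stays between 0.83 and 0.92 across the five months, which is consistent with the two series moving closely together.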

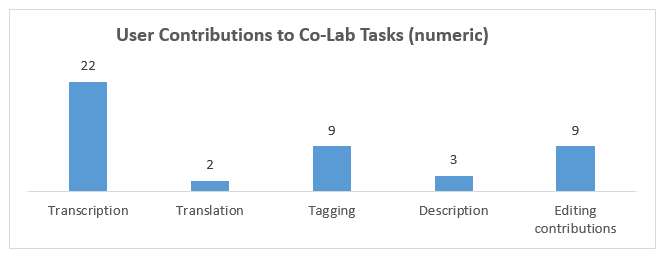

The Co-Lab user survey, conducted by the evaluation team, offers some insight into that trend. It asked registered users to identify to which Co-Lab tasks they have contributed. A category was given to each Co-Lab task (i.e., “Transcription,” “Translation,” “Tagging,” and “Description”). The evaluators included an “Editing contributions” category to identify the additional task available for users to perform. This was necessary to fill a gap in the data since Co-Lab’s documentation does not categorize the editing of user contributions as a distinct task and no data is collected to determine whether Co-Lab users actually perform it. Data from the Co-Lab user survey (see Figure 4, below) indicates that only a small number of respondents edit Co-Lab contributions.

Figure 4. User Contributions to Co-Lab TasksFootnote 48

Text version: Figure 4

Figure 4 presents the data on the number of user contributions to the following Co-Lab tasks: Transcription, Translation, Tagging, Description and Editing Contributions.

User Contributions to Co-Lab Tasks

| Task | Count |
|---|---|
| Transcription | 22 |
| Translation | 2 |
| Tagging | 9 |
| Description | 3 |
| Editing contributions | 9 |

Respondents appear to perform “Transcription” tasks the most, followed by “Tagging” tasks, and to contribute less to “Translation” and “Description” tasks. However, due to the sampling methodology used in the Co-Lab user survey, it is not possible to draw firm conclusions as to whether the overall Co-Lab user population exhibits the same tendencies.

The trends emerging from the Co-Lab statistical data and the Co-Lab user survey data could be taken as an indication of a potential issue with the reviewing and editing task functionality and task conceptualization (i.e., the actual reviewing and editing of user contributions is not captured and users cannot indicate that they have performed these actions). However, further investigation into those trends would be needed to establish the materiality of the issue, its exact nature and extent.

The experience of Co-Lab staff

Finding 10:

Co-Lab staff report having a positive experience with the initiative so far.

Staff interview data indicates that staff have had a positive experience with the initiative so far. They appreciate its innovative and experimental nature, as it introduces a new way of looking at clients through an approach not typical of LAC.

The most rewarding part for Co-Lab staff was the collaboration between the Public Services Branch and the ICIOB throughout the planning, development and launch of the Co-Lab application. It was a true collaboration in the sense that both teams were equally involved in all phases of the project. It presented an important learning opportunity and a different way of doing things. Launching and seeing the application work, the amount of interest in Co-Lab, and the appreciation shown by LAC’s senior management were also a source of staff satisfaction.

The most challenging aspect for some Co-Lab staff was the process for resource planning and allocation. According to them, IT projects are not planned for on an ongoing basis. Since Co-Lab is not a project, but rather a product, it requires product management, which is very different from project management and makes it particularly challenging. It requires an agile development approach that involves ongoing planning, testing and adjustment of requirements. Staff had to deal with the following main issues:

- incorporating user feedback;

- planning for agile development;

- tackling Co-Lab in the context of other priorities; and

- budget allocation.

The experience of Co-Lab users

Finding 11:

Co-Lab users reported having a positive experience with the initiative so far and intend to continue their participation.

Data from the Co-Lab user survey revealed that, in general, respondents have had a positive experience with Co-Lab (see Table 4, below). The increasing number of Co-Lab users and the growing amount of their contributions since the launch of the initiative further support this finding. Overall, respondents to the survey indicated that Co-Lab has enabled them to discover new parts of the LAC collection and has increased their interest in exploring it more.

Table 4. Experience of Co-Lab Users (numeric, N=27)

| Statement | Strongly agree | Somewhat agree | Neither agree nor disagree | Somewhat disagree | Strongly disagree | Do not know | Not applicable |
|---|---|---|---|---|---|---|---|
| Co-Lab has enabled me to discover new parts of the LAC collection. | 14 | 8 | 2 | n/a | 1 | n/a | 2 |
| Co-Lab has increased my interest in exploring more of the LAC collection. | 14 | 7 | 4 | n/a | n/a | 1 | 1 |
| Co-Lab has enhanced my experience with the LAC collection. | 17 | 7 | 3 | n/a | n/a | n/a | n/a |
| Co-Lab has strengthened my trust in LAC as a custodian of Canada’s documentary heritage. | 14 | 6 | 4 | n/a | n/a | 1 | 2 |
| Co-Lab has enhanced my feeling of ownership and responsibility toward Canada’s documentary heritage. | 14 | 7 | 3 | n/a | n/a | 1 | 2 |
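As a reading aid, the counts in Table 4 can be expressed as the share of respondents who agreed with each statement. The following is a minimal Python sketch and was not part of the evaluation. It assumes that “agreement” means the sum of the “Strongly agree” and “Somewhat agree” counts and that the denominator is all 27 respondents. The shortened statement labels and the function name `agreement_share` are hypothetical.

```python
N_RESPONDENTS = 27  # N reported in the Table 4 caption

# (strongly agree, somewhat agree) counts copied from Table 4.
# Keys are shortened labels for the survey statements (hypothetical wording).
TABLE_4 = {
    "discovered new parts of the collection": (14, 8),
    "increased interest in exploring": (14, 7),
    "enhanced experience": (17, 7),
    "strengthened trust in LAC": (14, 6),
    "ownership and responsibility": (14, 7),
}


def agreement_share(strongly, somewhat, n=N_RESPONDENTS):
    """Percentage of all respondents who strongly or somewhat agreed."""
    return round(100 * (strongly + somewhat) / n, 1)


shares = {label: agreement_share(*counts) for label, counts in TABLE_4.items()}
for label, share in shares.items():
    print(f"{label}: {share}%")
```

Because the survey sample is small and was not drawn to be representative, these percentages describe the respondents only and should not be generalized to all Co-Lab users.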

Respondents also affirmed that Co-Lab has:

- enhanced their experience of the collection;

- enhanced their feeling of ownership and responsibility toward Canada’s documentary heritage; and

- strengthened their trust in LAC.

The only criticism, raised by some users, concerns the lack of French content.

Respondents indicated that they are sharing their Co-Lab experience and are encouraging others to get involved (see Table 5, below). Few respondents stated that they are creating links between their Co-Lab contributions and their social media accounts. Users also expressed strong intentions to continue their participation in the initiative.

Table 5. Co-Lab Users Share Their Experience (numeric, N=27)

| Statement | Strongly agree | Somewhat agree | Neither agree nor disagree | Somewhat disagree | Strongly disagree | Do not know | Not applicable |
|---|---|---|---|---|---|---|---|
| I tell others about Co-Lab. | 15 | 7 | 3 | 1 | n/a | 1 | n/a |
| I create links between my Co-Lab contributions and my social media accounts. | 1 | 1 | 3 | n/a | 9 | 2 | 11 |

Co-Lab Challenges

Overall, users have positive opinions of the Challenges and describe them as interesting, insightful and stimulating. However, certain respondents would like Challenges to be added more frequently, to have more French content and to be more engaging. Evaluators’ observations confirmed that out of the eight textual Challenges currently available on Co-Lab’s website, only two have French content. Some respondents expressed interest in having more Challenges that include letters and personal documentation, and that are related to the Maritime Provinces or Acadia.

The “Edit or contribute using Co-Lab” feature of Collection Search Beta

Opinions of survey respondents on the “Edit or contribute using Co-Lab” feature of Collection Search Beta are somewhat mixed. Some respondents have had a positive experience with it, while others find it confusing and have had a hard time using it. Certain users are not aware of the feature and a few indicate they have not used it.

User suggestions for improvements to Co-Lab

Respondents to the Co-Lab user survey had a number of suggestions for improvements to Co-Lab (see Table 6, below). They roughly fall into two categories: improvements to the functionality of the Co-Lab application and improvements to the communication/promotion of the initiative.

Table 6. User Suggestions for Improvements to Co-Lab

| Improvements to the functionality of the Co-Lab application | Improvements to the communication/promotion of Co-Lab |
|---|---|
| adding an image rotation function | more publicity about Co-Lab |
| adding a function to export a PDF copy of material with the transcription in the text layer | use of notifications to keep contributors interested in participating |
| having controlled vocabulary and standardized terminology | |
| better image quality/clarity in zoom mode | |
| larger boxes for transcription and translation | |
| simultaneous status display of all tasks | |

Several respondents referred to a specific technical issue with the platform. They reported having difficulty with the save function of transcription tasks, namely, after saving their work, they were transferred to the beginning of the document they were transcribing instead of back to the place where they were working. Users reported this issue as time-consuming and frustrating.

4.4 Use of Resources

There are no known best practices in terms of the amount of financial and Full-Time Equivalent (FTE) resources dedicated to crowdsourcing initiatives. Information on cost estimation used by other cultural institutions is not available. Emerging trends from the literature indicate that the resources dedicated to such initiatives tend to range between 1.0 FTE and 9.0 FTEs.

The use of resources for the purposes of this evaluation is defined as the number of planned and actual FTEs. Other aspects of resource utilization include workload of staff, tools and support available to staff, and mechanisms for ensuring quality control.

Resource planning and estimation

Finding 12:

The initiative has used its dedicated resources as intended.

Co-Lab management indicated that there is no separate budget for Co-Lab and that resources are expressed in terms of salary dollars, FTEs and time. Costs were estimated in terms of cost for development of the Co-Lab application. Planning and monitoring of resource utilization is done as part of the overall planning and monitoring for the Public Services Branch and the ICIOB. Because the initiative is integrated in the operations of both Branches, it is managed as an operational activity rather than as a separate project. For that reason, financial coding was inconsistent and sufficient information could not be obtained to allow for a more detailed analysis of resource planning and utilization.

Staff workload and support

Co-Lab staff on the business side indicated that their workload in relation to Co-Lab is heavy, while IT staff indicated their workload was within the norm. Co-Lab management indicated that no specific support in terms of training is provided to employees, and that employees were deemed to have the experience and competencies to carry out the work. Some training was provided to Reference Services staff to enable them to answer questions about Co-Lab and its operation. Interviewees indicated that internal support within the respective Branches is available to Co-Lab employees.

Staff indicated that their primary source of support is from the partners of the ICIOB. They also pointed out that, due to the innovative nature of the initiative, it is difficult to have pre-established staff, tools or training.

Quality control

Finding 13:

Co-Lab has reasonable measures for quality control that are consistent with the crowdsourcing practices of other cultural institutions.

Co-Lab management and staff make a distinction between quality control of content (i.e., the physical contributions of Co-Lab users) and quality control of format (i.e., Co-Lab Challenges and technical aspects of the application).

Quality control of Co-Lab user contributions

Interviewees pointed out that as a crowdsourcing initiative, Co-Lab is meant to be open, meaning that anyone can edit the content of contributions. In addition, Co-Lab is meant to be “self-moderating,” “self-monitoring,” and “self-correcting”/“self-fixing.” Management pointed out that initially they had considered having a 24-hour waiting period to give staff the time to review user-contributed content before it became available publicly; however, this was not feasible due to anticipated high and increasing public participation rates. Co-Lab management stated that the quality control is ensured through the Co-Lab Challenges (i.e., ensuring that there are enough Challenges, and that they are appropriate and generating public participation). Management also ensures that feedback and comments of users are taken into account.

Co-Lab staff confirmed that currently there is no formal business process for systematic quality control and identification of inappropriate content and that they are not exercising any active form of quality control. They indicated further that, according to a consultation they held with other cultural institutions, formal quality control is not common practice. However, staff are not excluding the possibility of doing spot checks in the future. Staff pointed out that inappropriate user contributions are identified by contributors directly or by the public via the Co-Lab email box and action is taken accordingly. Interviewees pointed out further that there are mechanisms in place to allow them to identify and track users who make inappropriate contributions, and in extreme cases where there have been persistent inappropriate contributions, to prevent users from contributing by locking the content and blocking the user.

Evaluators’ observations confirmed that Co-Lab’s practices are consistent with those of other cultural institutions. For example, neither NARA nor the Library of Congress formally monitors user contributions,Footnote 49Footnote 50 and both intervene only to remove content that is identified as inappropriate in their user policies.Footnote 51Footnote 52

Quality control of Challenge preparation and application development

Interviews with Co-Lab management and staff indicated that the main responsibility for the selection and preparation of Challenges rests with the Public Services Branch and the Co-Lab Project Manager. Challenges are developed around Canadian commemorative events or LAC events, but could also be based on suggestions from LAC experts and the public. Typically, once a theme has been selected, staff look for appropriate material in LAC’s collection and decide on the types of tasks that will be incorporated (transcription, tagging, etc.). The theme is chosen based on criteria such as relevance, novelty or trendiness, and accessibility. In addition, material selected for inclusion in a Challenge must meet the following requirements: it has to be in “.jpg” format, indexed and available in Collection Search Beta, available online, open for access and copyright-free. Preparation times vary and can range from two weeks to a few months, depending on the nature and complexity of the Challenge and the state of the material. According to interviewees, basic ideas for Challenges are approved internally within the Public Services Branch but no approval from the Director General is required. In certain cases, additional approvals from other Branches might be necessary on certain aspects of Challenge preparation (e.g., promotion and/or communication).

Quality control of the technical aspects of the application development is ensured through internal approval and monitoring processes and ongoing user testing of the application’s functionality.